GO Programming Basics

Import

-

Tree Shaking and it's application

-

Tree shaking

-

Static Analysis

-

Dead Code Elimination

-

Example in Popular Frameworks

-

React

-

Angular

-

Benefits of Tree Shaking

-

Reduced Bundle size

-

Improved Performance

-

Efficient Dependency Management

Named import

If you want to name your import to something, write the name just before the double quotes of that import.

import (

foo "net/http"

)

-

Notes: Go compiler and linker are smart enough to import only the required parts, i.e. only the parts that we have used in our program from those imported packages into the final executable and how that happens is through Tree Shaking.

-

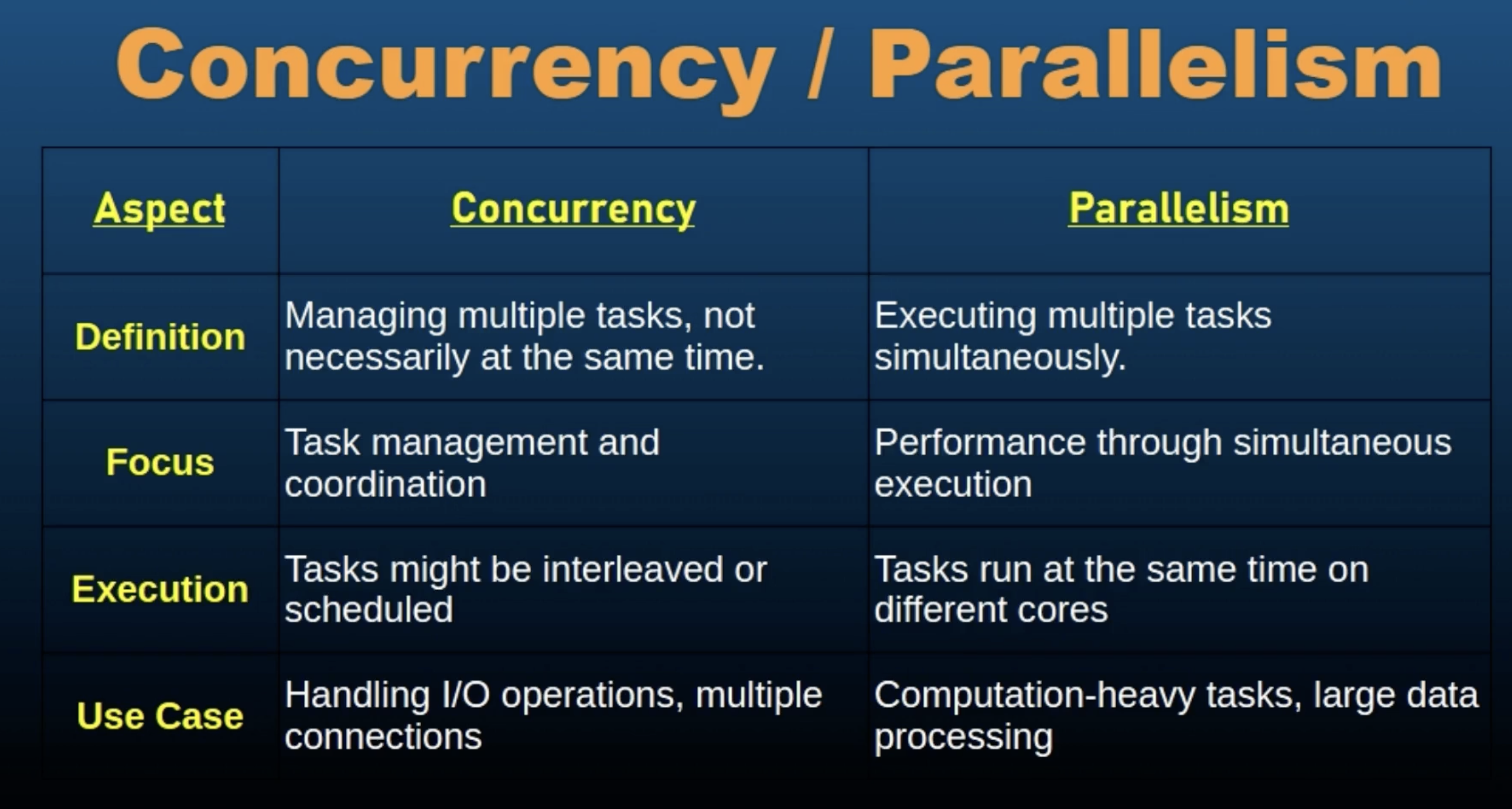

Tree Shaking : Technique used to eliminate the dead or unused code from the final executable or the final bundle, thereby reducing it's size and improving performance of the final bundle or executable.

-

During the build process, tree shaking statically analyzes the code base to determine which modules and functions are directly imported and used. Unused modules and functions identified during static analysis are labelled as

dead code. Tree Shaking then removes these unused segments from the final output optimizing the bundle or executable size. -

eg: React coupled with tools like Webpack and Roll-up employs tree shaking to remove unused components and utility functions from the Javascript bundle. And this optimization is crucial for large scale react applications.

-

Tree shaking minimizes the size of executables binaries or the final bundles which is critical for optimizing load times and improving runtime performance of our executable files. Smaller executables lead to faster load times and enhanced runtime efficiency benefitting both developers and end-users.

-

Developers can import the entire library without worry about overhead of unused code because tree-shaking trims the unnecessary parts during the build process.

-

The import statement in go plays a pivotal in integrating external functionalities while ensuring that only the necessary parts contribute to the executable.

Data Types

-

Integers

-

Floating Point Numbers

-

Complex Numbers

-

Booleans

-

Strings

-

Constants

-

Arrays

-

Structs

-

Pointers

-

Maps

-

Slices

-

Functions

-

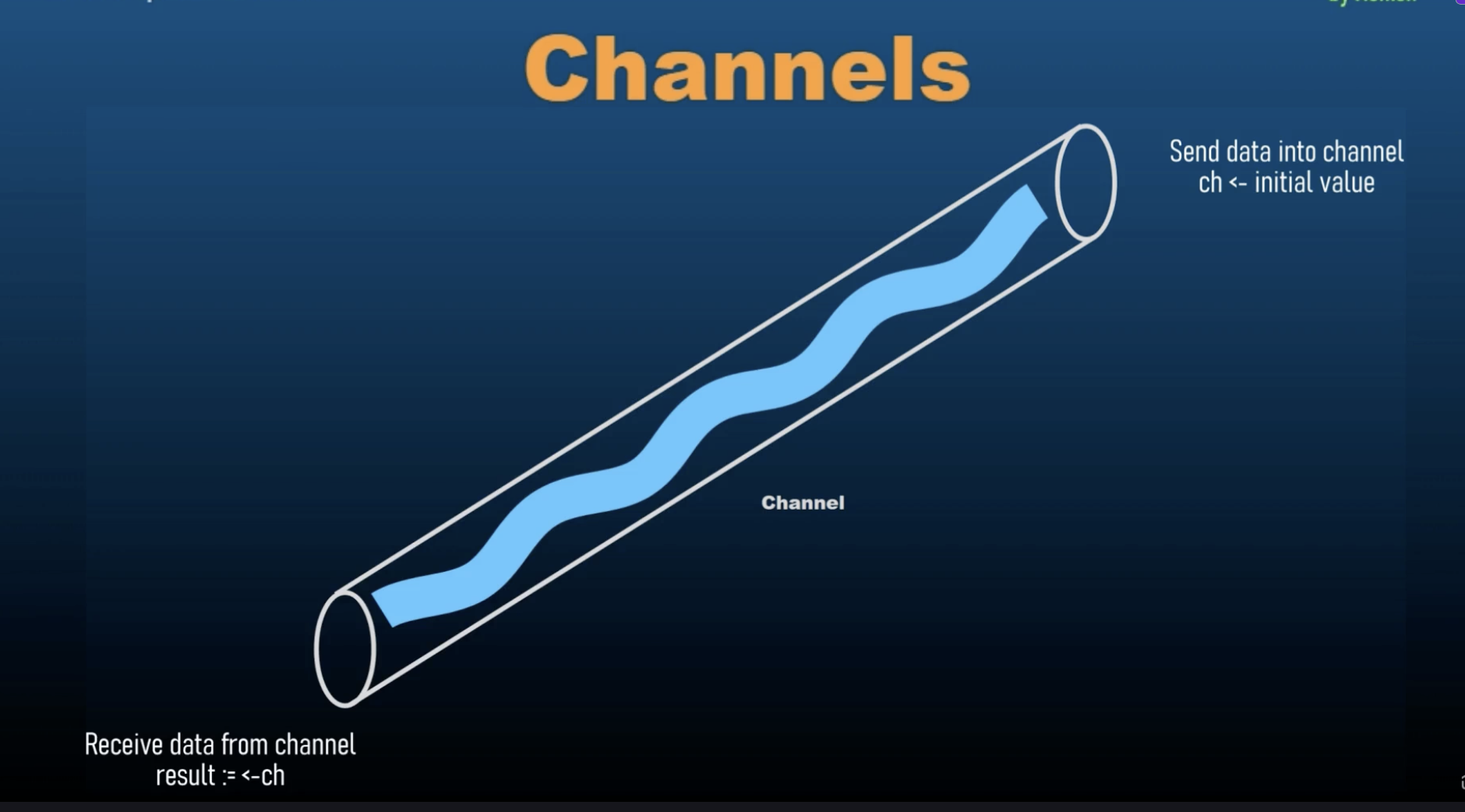

Channels

-

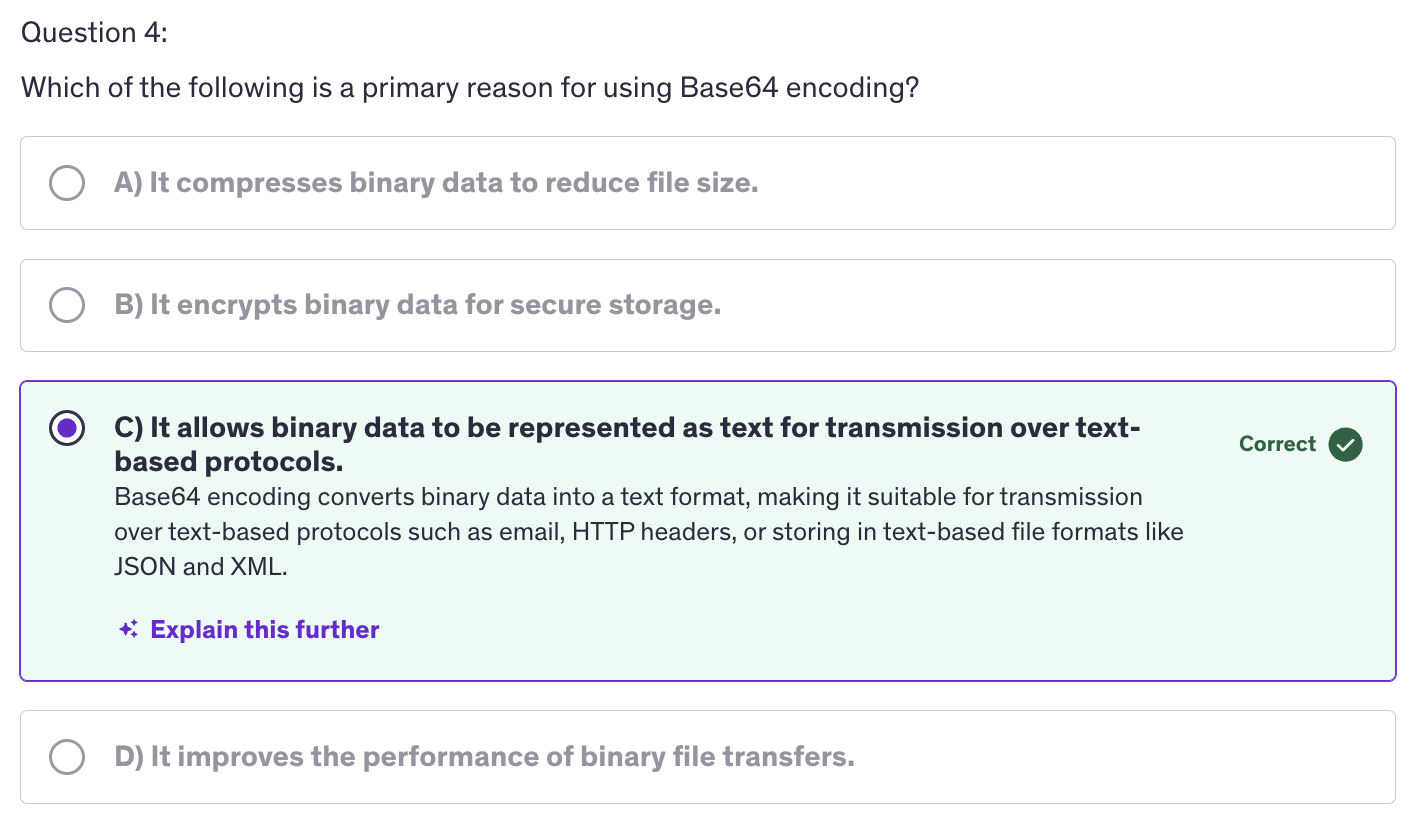

JSON

-

Text and HTML Templates

-

Variables declared without an explicit initialization are assigned a default zero value based on their type.

-

Numeric types are given a value of zero

-

boolean types are defaulted to False

-

String type is an empty string by default

-

pointers, slices, maps, functions and structs are initialized with

nilvalue.

Variables

-

the

typeof the is optional if we are initializing the variable otherwise we have to declare the variable with a particular type. -

we can use the gofer symbol (

:=) to initialize the variable.

count := 10

lastName := "Smith"

-

This is called type inference in go, allowing the variables to be initalized without explicitly specifying the type. The type is inferred from the assigned value.

-

Variables in go have

block scopemeaning that they are accessible only within the block they are declared. -

It's kind of a rule in Go that :

gofernotation can only be used within functions to declare and initialize variables locally. It is intented for local variables initialization within functions only. -

If we are making a package level variable (global variable) then we cannot use the gopher notation.

-

global variable is only limited to the package scope. Outside the package we cannot use that variable.

-

variables live within their scope.

-

Variables in go provide a flexible and powerful way to manage data within programs.

Constants

-

Constants must be initialized with values that can be determined at compile time. This typically includes literals and expressions that can be evaluated without runtime computations.

-

Go supports both

typedanduntypedconstants. -

Untyped constants are constants without a specified type until they are assigned to a value. They can be used in contexts that require a specific type, and go will automatically infer the approproate type.

-

NOTE: There is no short declaration for constants.

-

const block: We can group related constants together using this const block to make our life easier. -

constants in go provide a mechanism for defining immutable values that remain consistent throughout the execution of the program.

Arithmetic Operators

-

Basic Arithmetic Operators

-

Addition +

-

Subtraction -

-

Multiplication *

-

Division /

-

Remainder (Modulus) %

-

Operator Precedence

- Parentheses ()

- Multiplication *, Division /, Remainder %

- Addition +, Subtraction -

-

Overflow

-

Underflow

-

Why be mindful of overflow and underflow ?

-

Program Stability

-

Data Integrity

-

Type Safety

-

Mitigation Strategies

-

Range Checking

-

Type Conversion

-

Error Handling

-

Be mindful of potential overflow and underflow issues, especially when dealing with large numbers.

-

Overflow occurs when the result of a computation exceeds the maximum value that can be stored in a given numeric data-type. Overflow results in the value wrapping around to the minimum value for signed integers or causing unexpected behaviour for unsigned integers. eg: if you add two large integers and the result exceeds the maximum value represented by that integer type, overflow occurs.

-

Similarly, Underflow occurs when the result of a compilation is smaller than the minimum value that can be stored in a given numeric data type. This is more relevant for floating point numbers, where underflow can lead to loss of precision or significant digits in calculations involving very small values.

-

This needs to be taken care of when we are working on applications that are involved in scientific calculations, and where calculated values are big numbers.

For Loop

-

For loop is a fundamental control structure that allows you to repeatedly execute a block of code based on a condition.

-

Syntax

for initialization; conditon; post {

// Codeblock to be executed repeatedly

}

-

Initialization: Executed before the first iteration. Typically used to initalize loop variables.

-

Conditon: Evaluate before each iteration. If false the loop terminates

-

Post: Executed after each iteration. Usally increments or updates loop variables.

for i=1; i<=5; i++ {

// Code block to be executed repeatedly

}

-

Break: Terminates the loop immediately, transferring control to the next statement after the loop.

-

Continue: Skips the current iteration and moves to the next iteration of the loop.

-

%v-> general value%d-> specific integers

Operators

-

Logical Operators

-

! : logical NOT

-

|| : logical OR

-

&& : logical AND

-

Bitwise Operator

-

& : bitwise AND

-

| : bitwise OR

-

^ : bitwise XOR

-

&^ : bitwise AND NOT

-

<< : left shift

-

: right shift

-

Comparison Operators:

-

== : equal

-

!= : not equal

-

< : less than

-

<= : less than or equal to

-

: greater than

-

= : greater than or equal to

Conditions: if else

- If else condition are essential for controlling the flow of execution based on different conditions. They allow you to create decision making logic within your programs, enabling you to execute specific block of code based on whether certain conditions evaluate to true or false.

Conditons: switch

-

The switch statement provides a concise way to evaluate multiple possible conditions against a single expression. It simplifies the syntax compared to using multiple if and else if statements, making the code more readable and maintainable when dealing with multiple branching conditions.

-

Syntax : Switch case in case (switch case default) (fallthrough) : no break statements are needed in switch cases.

switch expression {

case value1:

// Code to be exceuted if expression equals value1

fallthrough -> goes to the next case after evaluating this case.

case value2:

// Code to be exceuted if expression equals value2

case value3, value4, value5:

// Code to be exceuted if expression equals value3

// mutliple conditions

default:

// Code to be exceuted if expression does not match any values

}

-

In Go, switch case can also be used with type assertions to switch on the type of an interface value.

-

x interface{}means x can be of any data-type. -

As per Go compiler, we cannot use

fallthroughwhen we are using atypeswitch.

Arrays

-

Arrays are fundamental data structures that allow you to store multiple values under a single variable. Understanding array is crucial as they provide a way to manage and manipulate ordered data efficiently.

-

Syntax :

var arrayName [size][elementType]

-

size is the number of elements that the array can hold. It's a fixed size, it's not variable. That's why we have to declare it beforehand.

-

elementType is the type of elements that the array can store.

-

In Go, arrays are value types means when you assign an array to a new variable or pass an array as an a rgument to a function, a copy of the original array is created and modifications to the copy do not affect the original array. So if we modify the copied array, it does not affect the original array.

-

We can iterate through an array using a

rangebased iteration.rangeis a keyword in go, and any collection that we have, we can iterate over that using therangekeyword. -

If we want to discard the index, we can use

_(underscore). Underscore means that we are discarding that value. Underscore in Go is known asblank identifer.

numbers := [5]int{10,11,12,13,14}

for _ , value := range numbers {

fmt.Printf("Value : %d\n",value)

}

-

Underscore is a

Blank Identifier, used to store unused values. Underscore in Go has several important uses. -

Just as we saw above, if we don't want to use any value that is being returned from anywhere be it a range or a function that returns a value, but we don't want to use one value from multiple values being returned by the function. So in that case we can assign underscore to that value so that we will not have to use that and we will not get anerror that even if we let's say store in

ibut not usedilater. -

We can also do underscore to avoid compiler errors of a variable not being used for temporary testings.

b := 2 _ = b -

We can determine the length of an array using the

len()function with the arrayName as an argument .len(arrayName) -

Go supports multi-dimensional arrays which are array of arrays. They are useful for representing matrices and other structured data.

-

If we were to use the original array in copied array, we would have to use pointers and addresses.

originalArray := [3]int{1,2,3}

var copiedArray *[3]int

copiedArray = &orginalArray

-

So copiedArray carries the address where an array of three integers exists and if we have not initialized the array, it contains three zero values.

var copiedArray *[3]int -

Assign the copied array the address where the original array is by using the ampersand sign (

&) with the originalArray.

Slices

-

Slices are dynamic flexible views into arrays. They provide a more powerful and convinient interface to sequences of data compared to arrays.

-

Slices are references to underlying arrays. They do not store any data themselves but provide a window into the array's elements. Slices can grow and shrink dynamically.

-

We have the same function

len()which can check the length of the slice. -

We also have a

cap()function which can check the capacity of the slice. It will check the number of elements in the underlying array, starting from the slices' first element. -

we can also initialize slices using

make.

slice := make([]int, 5) // slice of capacity 5

- We convert an array into a slice.

a := [5]int{1,2,3,4,5}

slice = a[1:4] // element of index 1 to index 4 but not including 4 -> [2,3,4]

- We can also append more elements to a slice.

slice1 := []int{1,2,3,4}

slice1 = append(slice, 5,6,7,8)

- We can also copy a slice.

sliceCopy := make([]int, len(slice))

copy(sliceCopy, slice)

-

Slices also have a concept of

nilslices. A nil slice has a zero value and does not reference any underlying array. It is actually blank. -

we can also iterate over slices using range based loops.

-

The slices package also contains many utility functions which are useful for our day to day programming.

-

using

Equal()to compare two slices for equality.

if slices.Equal(slice1, sliceCopy){

fmt.Println("slice1 is equal to sliceCopy")

}

- slices also support slice operator. Syntax :

slice[low:high]

slice2 := slice1[2:4]

-

A slice once initialized is always assosciated with an underlying array that holds it's elements. A slice is a reference to an underlying array that holds the actual elements. A slice therefore shares storage with it's array and with slices of the same array. By contrast distinct arrays always represent distinct storage.

-

The array underlying a slice may extend past the end of the slice and the capacity is a measure of that extent. So the capacity of a slice is the sum of the length of the slice and the length of the array beyond the slice.

-

So it's not the capacity of the underlying array. It is the capacity of the slice that it can hold. And because the slice is started at a later point, it started at a different index, not at the index zero but at a different index, it may have a capacity which is lesser than the original array. But if we are truncating the slice before the end of the array, it will still count the elements that are past the end of the slice. That's we have the capacity of out

slice2as 6. -

In conclusion, slices in Go provide a powerful mechanism for working with collections of data, offering flexibility, efficiency, and ease of use compared to traditional arrays. They allow dynamix resizing and provide a powerful operations for manipulating sequences of elements.

Maps

-

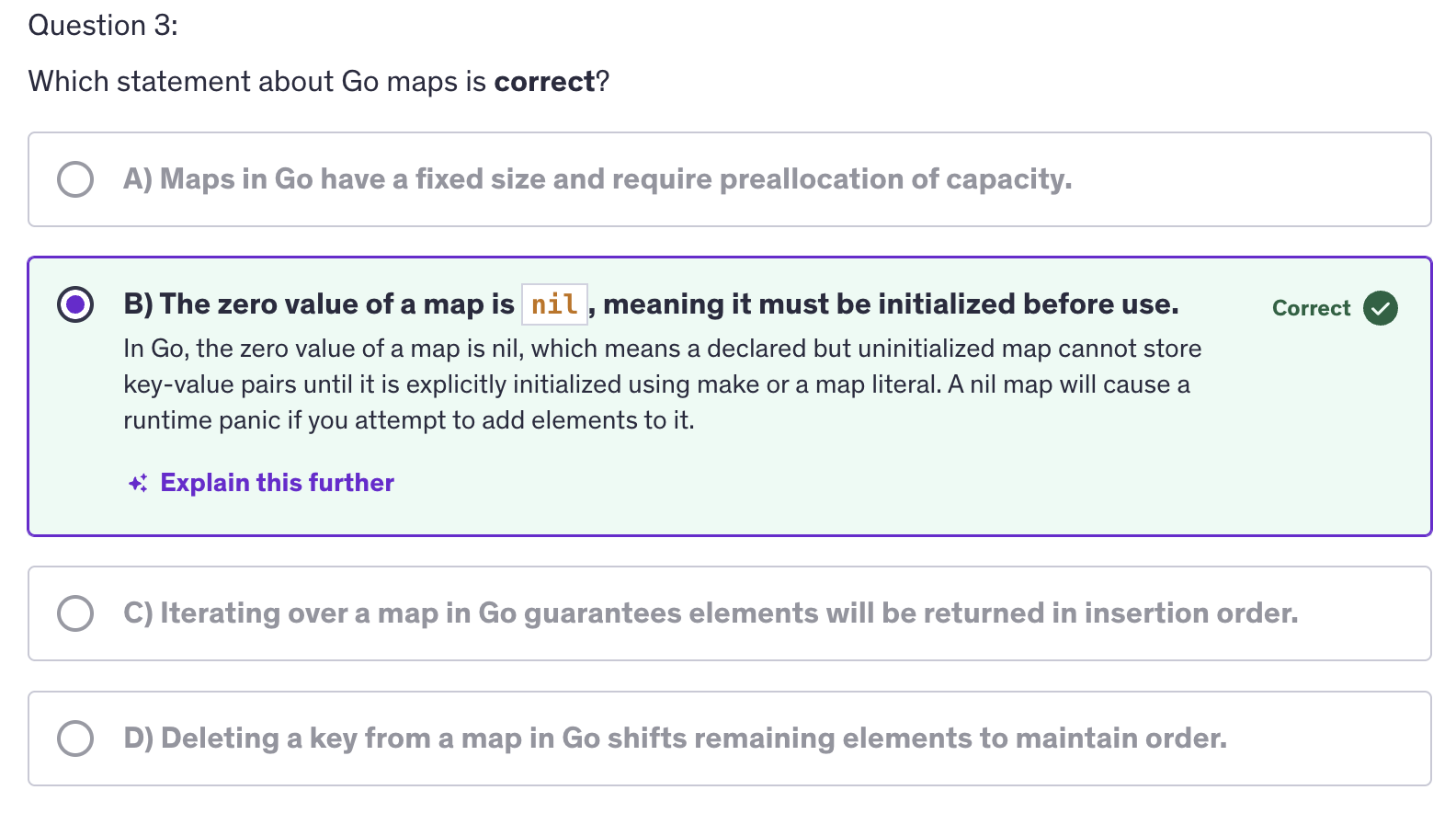

Maps are a built in data-structure that assosciate keys with values. They are like dictionaries in other programming languages, and provide an efficient way to look-up data by a key.

-

Maps provide an efficient way to store and retrieve key value pairs. Each key must be unique within the maps and the keys are typically of a comparable type, like strings, integers.

-

Maps are unordered collections of key-value pairs, meaning that there is not guaranteed order when iterating over them.

-

3 ways to create a map

1. var mapVariable map[keyType]valueType

2. mapVariable := make(map[keyTpe]valueType)

3. // Using a Map Literal

mapVariable := map[keyType]valueType {

key1: value1,

key2: value2,

key3: value3

}

-

In case of a non-existent key, we get a zero value. If the key doesn't exist the zero value of the value type is returned.

-

If we want to delete a key-value pair, use the

delete()function.

delete(myMap, key)

-

If we want to completely remove all the key-value pairs then we use the

clear()method. -

We get two values when accesing maps my keys. the first one is the

valueassociated with thatkeyand the second is an optional usable value isboolwhich indicates whether the key is present or not. useokto represent the 2nd optional value i.e. true or false, it's a convention.

myMap := make(map[string]int)

myMap["key1"] = 9

myMap["key2"] = 20

value, ok := myMap["key1"]

fmt.Println(value)

fmt.Println(ok) // returns true

- Maps also have an equality check.

if maps.Equal(myMap1,myMap2) {

// Code block to be executed when both maps are same

}

- If we want to iterate over the map, we use a for loop with

range.

for key, value := range myMap{

fmt.Println(key, value)

}

-

In real world scenarios, you may be required to only use the values and discard the keys present in the map. So in that case we can use underscore(_) to discard keys.

-

If we have a map that hasn't been initialized but only declared, then it is initialized to a nil value. The zero value of a map is

nil. -

Similar to arrays and slices we have

len()function to get the length of the map. -

We have the concept of nested maps where an outer map can have maps embedded inside it.

myMap5 := make(map[string]map[string]string)

myMap5["map1"] = myMap4

Range

-

The

rangekeyword in go provides a conventional way to iterate over various data structuress like arrays, slices, strings, maps and channels. It simplifies the process of iterating and accessing the elements without needing to deal directly with indices or iterators. -

characters are called

runesin Go. -

Few things to keep in mind while using range :

-

rangekeyword operates on a copy of the data structure it iterates over. Therefore modifying index or value inside the loop does not affect the original data structure. -

For arrays, slices and strings, range iterates in order from the first element to the last.

-

For maps, range iterates over the key-value pairs but in an un-specified order.

-

For channels, range iterates until the channel is closed. So if the channel is not closed, range will keep iterating over that channel.

-

If we are not using any value, then we can use underscore, the blank identifier to prevent memory leaks by allowing Go's garbage collector to reclaim the memory.

Functions

- Functions are fundamental building blocks in go, encapsulating reusable code blocks that can be invoked multiple times with different inputs. They play a crucial role in structuring go programs by promoting modularity and code reusability.

func <name> (parameters list) returnType {

// Code Block to be executed

return value

}

-

A function name should be a valid identifier and should follow go naming conventions. When we are making a public function, it should start with an uppercase letter and if it's a private function, then it needs to start with a lowercase.

-

example of public function :

Println() // -> under the fmt package

-

returnType specifies the type of the values returned by the function. We also have an option of returning multiple values and it is one of the unique aspects of Go language and a very very useful feature to make highly efficient code base.

-

Arguments that are passed to afunction are copied into the function's parameters. Modification to the parameters inside the function do not affect the original arguments, so never expect the original argument to be updated if the parameters are updated inside the function.

-

Another type of functions is

Anonymous Functions. We can call themclosuresorfunction literals. These are functions defined without a name directly in line where they are used.

func(){

fmt.Println("Hello, Anonymous Function")

}

or,

greet := func(){

fmt.Println("Hello, Anonymous Function")

}

greet() // Now greet becomes the function, so we can execute it like a function.

-

We can use functions as types and functions in Go can be assigned to variables passed as arguments to other functions and returned from functions, making them a first class object or a first class citizen.

-

So the concept of first class objects/citizens in programming refers to entities that have no restrictions on their use and can be treated uniformly throughout the language. When an entity is a first class citizen, it means you can perform a wide range of operations on it, just as you would with basic data types like integers or strings. These operations typically include passing as arguments, returning from functions, assigning to variables or storing the data structures.

-

So you can pass the entity as an argument to functions. That means, if the functions are first class objects/ citizens, they can be returned from another functions.

-

Similary we can assign the entity to a variable. A first class citizen can be assigned to a variable.

-

And you can also store the entity in data-structures like arrays, lists or maps.

-

We can use functions in any capacity that we want.

Multiple Return Values

-

In Go, functions can declare multiple return values by listing them in parentheses after the parameter.

-

The biggest benefit of having mutlitple return values is error handling because one of the values can be an error. Useful in bigger projects like making an API.

-

We can use named returns also. Eg:

func divide(a,b int)(quotient int, remainder int){

quotient = a/b

remainder = a%b

return

// We don't to specify quotient and remainder

// in the return statement coz go compiler is

// smart enough to understand that. Now that

// you have mentioned quotient and remainder in

// the return parentheses section, it will know

// that it have to return these variables.

}

Variadic Functions

-

Variadic functions in Go allow you to create functions that can accept a variable number of arguments. This flexibility is particularly useful when you want to design functions that can handle a varying number of inputs without specifying them individually.

-

In Go, variadic functions are defined by prefixing the type of the last parameter with an ellipsis (

...). -

Syntax:

// ... Ellipsis

func functionName(param1 type1, param2 type2, param3 ...type3) returnType {

// function body

}

param3 with ellipsis type is called a variadic parameter.

-

variadic parameter is a list, so when we are iterating over a list it will have an index and a value.

-

Variadic function is something that you will be using a lot in real-world scenario.

-

Variadic parameter must be the last parameter in the function signature. They cannot come before any other parameter. Regular parameters will be taking precedence over variadic parameters while declaring them in the parameter list.

-

To unpack a slice we use ellipsis(...) operator which destructures the slice into individual elements.

numbers := []int{1,2,3,4,5,9}

total := sum(numbers...) // passes sum(1,2,3,4,5,9) to the function sum

- In conclusion, variadic functions provide a flexible way to define functions that can accept a variable number of arguments of a specific type. They are particularly useful when dealing with functions that need to handle different numbers of inputs dynamically.

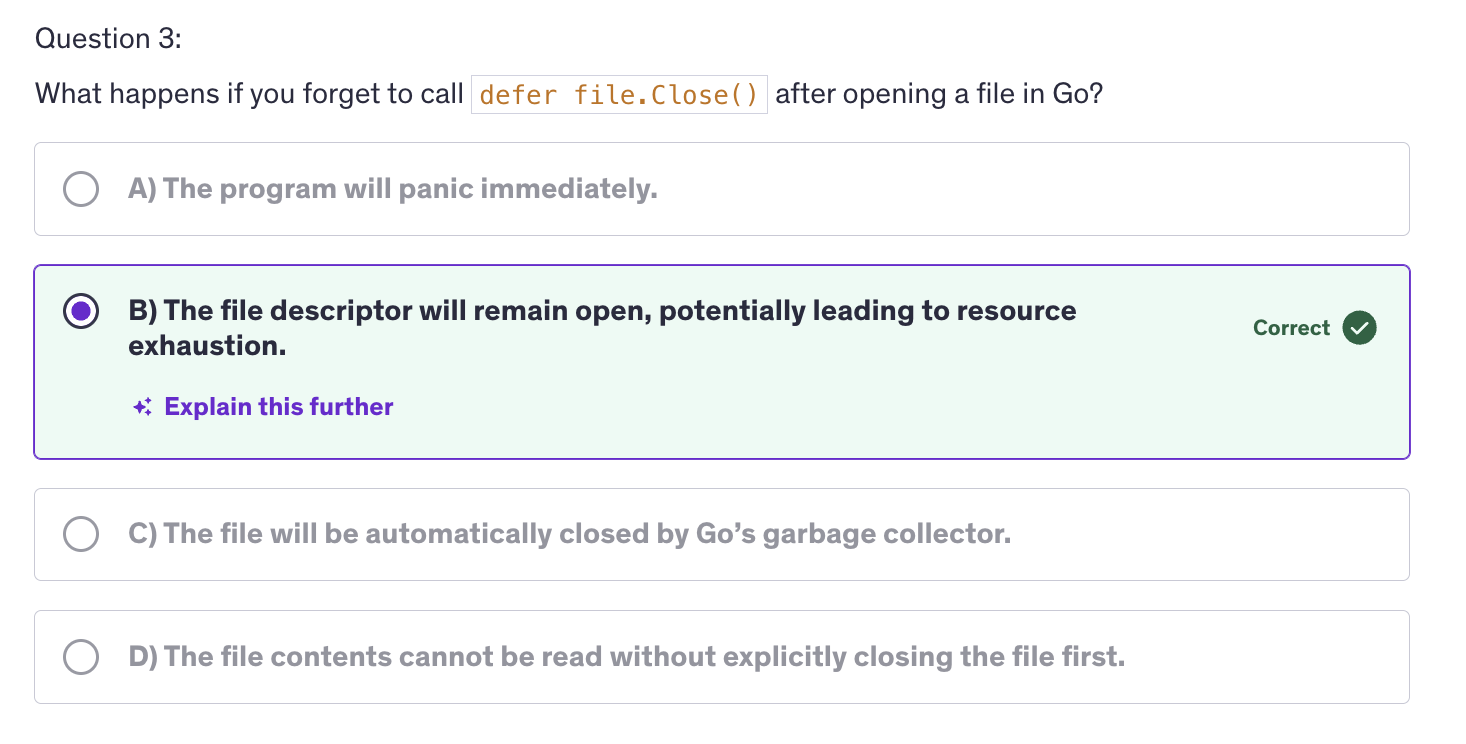

Defer

-

In go differ is a mechanism that allows you to postpone the execution of a function until the surrounding functino returns. It's a useful feature for ensuring that certain cleanup actions or finalizing tasks are performed.

-

A defer statement is a function whose execution is deferred to the moment the surrounding function returns, either because the surrounding function executed a return statement reached the end of it's function body or because the corresponding go routine is panicking.

-

Go routines are functions which run in the background, which are running concurrently in the background and they are not the part of the main thread. So it is a little like async / await in NodeJS.

-

Any function which is a go-routine is thrown to the back so that it finished of it's work, not in main thread, not blocking the main thread but in the background, and then comes back and joins the main thread once it's finished.

-

So anything with defer, any statement or any function which has a defer keyword as it's prefix will be deferred till the end of that function.

-

We can also have multiple deferred statements in a function, and they will be executed in a last in - first out order when the function returns.

-

arguments to differed functions are evaluated immediately when the differ statement is encountered. So just because the defer statement gets executed at the end doesn't mean that it is getting evaluated at the end.

-

Defer Practical use cases :

-

Resource cleanup

-

Unlocking Mutexes

-

Logging and Tracing

-

Best Practices

-

Keep Deferred Actions Short

-

Understand Evaluation Timing

-

Avoid Complex Control Flow

-

Defer is commonly used to ensure that resources like files or database connections are closed after they are opened.

-

When using Mutexes to synchronize GoRoutines, defer can be used to ensure that a mutex is unlocked even if a function panics.

-

Defer functions are also useful for logging and tracing entry and exit points of functions.

-

Defered functions should be short and simple to avoid unexpected behaviour and to keep the function's logic clear. Be aware of when arguments to defered functions are evaluated to prevent unintented consequences. And using defer in loops or nested functions can lead to subte bugs if not handled carefully.

-

defer is something like finally block in try-catch-finally in other languages. There are certain cleanup activities that we delegate to defer and finally as well. Defer ensures that critical actions are performed in a predictable manner regardless of how functions exit which is especially important for maintaining code realibility and readability.

Panic

-

In Go panic is a builtin function that stops the normal execution of a function immediately. When a function encounters a panic, it stops executing it's current activities, unwinds the stack and then executes any deferred functions. This process continues up the stack until all functions have returned, at which point the program terminates.

-

A panic is typically used to signal an unexpected error condition where the program cannot proceed safely.

-

The syntax of a panic function is called with an optional argument of any type, which represents the value associated with the panic.

panic(interface{})

-

Interface means you can input any value of any type as an argument for this function.

-

If a function gives flexibility to input any value of any type then the argument type would be interface.

-

Defer will execute when the function returns a value, but it will also execute even when the function is panicking.

-

As soon as we encounter panic, anything after that will not be executed. As soon as we panic we are exiting out of the function. So all the statements after panic will not be executed. Anything after panic will not be reached by the runtime.

-

Panicked only after running the defer functions. Once all defer functions are executed the program terminates with the panic message.

-

Misuse of panic can lead to unpredictable behavious and it should be avoided in scenarios where regular error handling suffices.

Recover

-

recover is a built in function that is used to regain control of a panicking go routine. It's only useful inside the defer functions and is used to manage the behaviour of a panicking go-routine to avoid abrupt termination.

-

A panic is a built-in function that stops the ordinary flow of control and begins panicking. When the function panic is called, the current function stops execution and any defered functions in that function are executed and then the control returns to the calling function. This process continues up the stack untill all the functions in the current go routine have returned at which point the program crashes and prints the panic message.

-

Recover is a built-in function that stops the propagation of a panic and returns the value passed to the panic call. When used in combination with defer, recover can be used to handle or log error gracefully and allow and allow the program to continue executing. SO when we use recover, we will continue to execute our program. It will not crash. However we will be able to log any error that happened when the program panicked.

-

The recover function is called inside the defer function.

-

The defer keyword schedules a function to be executed just before the surrounding function. The

process()function in our code returns regardless of whether it returns normally or due to an error. But defer function will be executed just before this function. Theprocess()function returns. -

If there is no panic, recover returns

nil. So if recover is not returning nil that means a panic happened. So as soon as there is panic, recover returns the panic value. -

So if it was an API then it would continue to run and it would not shut down abruptly. If we are using recovery mechanism, then our API, our gRPC API or our rest API will not shut down.

-

So what we actually do in recover is that instead of printing this to the console, so we will pass this error message to our error event handling mechanism. Or we will log this into a logger and we will log these messages along with more details like on which API endpoint was the error made, which user was logged in when this error was made, timestamp of the error, etc.

-

Practical Use Cases :

-

Graceful recovery

-

Cleanup

-

Logging and Reporting

-

Best Practices

-

Always Use with Defer

-

Avoid Silent Recovery

-

Avoid Overuse

-

We use recover to perform cleanup operations like closing files, releasing resources in a defer function that uses recover to handle panics and most importantly logging and reporting. Log and report panics to understand and diagnose unexpected errors in production systems without halting the application.

-

Panics and recover should be used sparingly and only for exceptional unrecoverable errors. Normal error handling with return values or errors should be used for expected error, so do not overuse panics and recover.

Exit

-

In Go programming language,

os.exit()is a function that terminates the programming immediately with the given status code. It's useful for situations where you need to halt the execution of the program completely, without defering any functions or performing any cleanup operations. That means the exit will be done in a hastly fashion without doing any cleanup or without running any deferred functions or any deferred statments. -

The function takes an integer argument which represents the status code returned to the OS. Conventionally a status code of 0 indicates successful completion, while any non-zero status code indicates an error or abnormal termination. Calling os.exit() will not invoke deferred functions, including those registered using defer.

-

It by-passes the normal defer, panic and recover mechanisms.

-

Practical use cases :

-

Error Handling

-

Termination Conditions

-

Exit Codes

-

Best Practices :

-

Avoid Deferred Actions

-

Status Code

-

Avoid Abusive use

-

We need to avoid deferred actions. Since os.Exit() bypasses deferred actions, to ensure that all necessaru cleanup operations are performed explicitly before calling

os.Exit().

Init function

-

In Go, the

init()function is a special function that can be declared in any package. It's used to perform initialization tasks for the package before it is used. -

Go execute

init()functions automatically when the package is initialized. This happens before the main function is executed. -

init function always gets executed before the main function and it occurs exactly once per package even if the package is imported multiple times. So if we are importing the package in multiple files, it will only happen once.

-

Order of execution : Within a single package, go executes the init functions in the order in which they are declared. If there are multiple init functions, they execute sequentially following their textual order in the package file.

-

Usage: Init function is commonly used for tasks such as initializing variables, performing setup operations, registering components or configurations and initializing state required for the package to function correctly. That's why it's called init function because it is initializing different things.

-

go automatically calls the init() function before executing the main function.

-

Practical Use Cases :

-

Setup Tasks

-

Configuration

-

Registering Components

-

Database Initialization

-

Best Practices:

-

Avoid Side Effects

-

Initialization Order

-

Documentation

Quiz Questions

1. Why does Go reuire a runtime though it compiles to machine code ?

Ans:

2. How does Go Optimized imported packages to reduce the final executable size ?

Ans:

3. Which statment about Go maps is correct ?

Ans:

4. What is a key advanatge of using multiple return values in Go ?

Ans:

5. What is a key rule when defining a variadic function in Go ?

Ans:

Go Programming: Intermediate

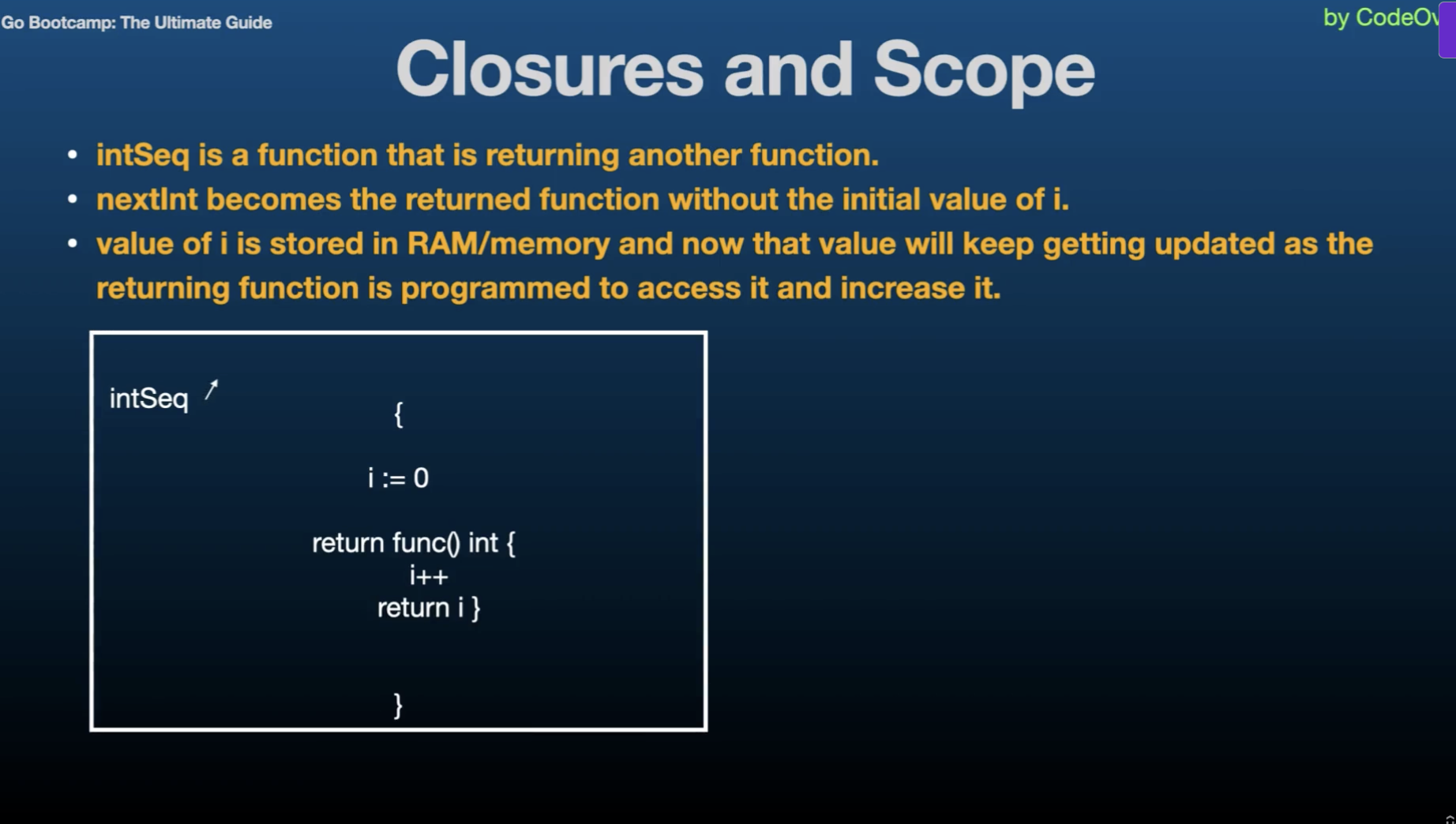

Closures

-

A closure is a function value that references variables from outside it's body. The function may access and assign to the captured variables, and these variables persist as long as closure itself is referenced.

-

Closures work with lexical scoping, meaning they capture variables from their surrounding context where they are defined. This allows closure to access variables even after the outer function has finished execution.

-

Closures leverage the first class objects property of functions by allowing functions to capture and manipulate their surrounding state.

-

Code Summary :

-

i and the gofer assignment zero line and the fmt.Println("Previous values of i:") run everytime you call the adder function, but they only affect the initial state of i. When you create a new closure by calling sequence but they only affect the initial state of i when you create a new closure by calling adder function.

-

Once the closure is created, the subsequent calls to the closure that is, the returned function use and modify the captured value of i.

-

Practical Use Cases

-

Stateful functions

-

Encapsulation

-

Callbacks

-

Usefulness of Closures

-

Encapsulation

-

Flexibility

-

Readability

-

Considerations

-

Memory Usage

-

Concurrency

Best Practices

-

Limit Scope

-

Avoid Overuse

-

Closures are useful for creating functions that maintain state accross multiple calls without exposing the state directly.

-

They help encapsulate functionality and data, allowing for cleaner and more modular code.

-

Closures are commonly used in callback functions, where they capture cariables to provide context or maintain state during asynchronous operations.

-

Closures can keep variables alive longer than expected if they hold references to large objects or resources.

-

Care must be taken when using closures in concurrent programs to avoid race conditions and unintended side effects. That's why it's better to limit the scope. Keep the scope of captured variables enclosures as narrow as possible to minimize unintended side effects.

Recursion

-

Recursion is the process of a function calling itself. It breaks down a problem into smaller sub-problems of the same type until they become simple enough to solve directly.

-

In every recursive function, there is a base case which is a condition where the function stops calling itself and returns a value. Without a base case, the recursion would continue indefinitely, leading to a stack overflow. And apart from the base case we have a recursive case. This is where the function calls itself with a smaller or simpler input to make progress towards the base case.

-

Practical Use cases :

-

Mathematical Algorithms

-

Tree and Graph Traversal

-

Divide and Conquer Algorithms

-

Benefits of Recursion

-

Simplicity

-

Clarity

-

Flexibility

-

Considerations

-

Performance

-

Base Case

Best Practices

-

Testing

-

Optimization

-

Recursive Case

-

Sometimes a recursive solution can be optimized using techniques like memoization. Memoization is caching results of expensive function calls.

Pointers

-

A pointer is a variable that stores the memory address of another variable.

-

Everytime we execute go run, a new executable is made. So Go Run makes a temporary executable each time we execute

go run. -

The zero value of a pointer is

nil -

When we are using pointers, the actual memory address of the variable is passed on to the function. And now the function is accessing the memory address where the number ten is stored by a.

-

Use cases :

-

Modify the value of a variable indirectly

-

Pass large data structures efficiently between functions

-

Manage memory directly for performance reasons.

-

Pointer Declaration and Intialization

-

Declaration Syntax :

var ptr *intptris a pointer to an integer -

Initialization :

var a int = 10 ptr = &aptrnow points to a's memory address -

Pointer Operations: Limited to referencing(

&) and dereferencing(*) -

Nil Pointers

-

Go does not support pointer arithmetic like C or C++

-

Passing Pointers to functions

-

Pointers to Structs

-

Use pointers when a function needs to modify an argument's value

-

unsafe.Pointer(&x)converts the address ofxtounsafe.Pointer -

We will be taking up gRPC and Protocol Buffers and we will be using pointer a lot in Protocol Buffers and gRPC.

-

Go also have an

unsafepackage and go's unsafe package allows low level operations like direct memory access and typecasting useful in certain advanced scenarios. -

In conclusion, understanding and mastering pointers in Go opens doors to more efficient memory management, enhanced control over data structures and access to low level operations when necessary.

String and Runes

-

A string is a sequence of bytes. Bytes are unsigned int8 (

uint8) values. They often represent text. A sequence of bytes represent text. -

Strings are immutable meaning, once created, their values cannot be changed.

-

Strings can be created with double quotes

" "or backticks "`". Backticks are used for raw string literals. In raw string literals the escape sequences will be discarded and everything will be treated like a character and it will print everthing out the way it was written.

message := "Hello\nWorld" // Hello and World on different line

rawMessage := `Hello\nWorld` // Hello\nWorld as output

message2 := "Hello, \rGo!" // Go!lo,

-

\rtakes the cursor to the first position in the line. -

Strings are an array of unicode characters. And these unicode characters i.e. each alphabet that we see, in Go it is called

rune. -

Rune is an integer value that represents a character.

-

Since strings is an array of characters, it also has a length. We can get the length of the string using the same

len()function for arrays, slices. -

All escape sequences are treated as 1 character.

-

When we are using concatenation, we are joining two strings. And when Go is joining two strings, it will not autmatically insert a space between them. It only happens when we are using a print statement and we are using thode different variables seperated by a commas. So it knows, that these are different variables with different content and they could mean different, so that's why it automatically inserts a space between them. But when it comes to concatenation, it knows that we want to join two strings and that's why it doesn't apply any space in between.

-

Lexicographic Comparison : It is a method of comparing sequences such as strings based on the alphabetical order of their components. In Go, lexicographic comparison is used to compare strings. This comparison is essential for sorting, searching and other operations that involve ordering strings.

-

If one string is a prefix of another, the shorter string is considered smaller.

-

The compiler is comparing the ASCII value of the characters.

-

When it comes to string iteration, it's just like iterating over a slice or an array. It will have an index and a value.

-

%xplaceholder/format verb is used to get the hexadecimal value of a character. -

RuneCountInString()-> counts the utf-8 characters in a string. -

Strings a immutable that means, operations like appending, replacing or modifying, require creating new strings. So we have to manipulate strings by creating new strings.

-

We cannot append append more runes, more characters at the end or in the middle or in the begining using any method. So for that we have to create a new string and then perform a concatenation or whatever that we want to manipulate those string variables.

-

A

runeis an alias for int32 and it represents a Unicode code point, a Unicode value. So it is not a character, it is an integer value. A rune is an integer value and that value represents a Unicode code point and that will be converted into a character. -

So runes are used to represent individual characters in a string, and they facilitate working with Unicode characters efficiently.

-

Using Unicde, Go encopassess characters from a lot of languages accross the globe and that makes the jobs of the programmers much easier because we have characters from many, many languages that are used accross the world.

-

A rune is declared with the type as

rune. Runes are declared using single quotes. Double quotes and backticks are for strings.

var ch rune = 'a'

-

Rune literals are single quoted characters representing Unicde code points.

-

Runes facilitate handling of Unicode characters, supporting internationalization and mutilingual text processing.

-

We have support for Smileys in Go language. We can use smileys directly in go as chacters.

-

Strings provide a convenient abstraction for working with textual data, while runes enable precise handling of individual characters and support for diverse languages.

-

Runes and Characters

-

Similarities

- Representing Characters

- Storage Size

-

Differences

- Unicode Support

- Type and Size

- Encoding and Handling

-

Both runes and characters typically occupy a fixed amount of memory. Runes in Go are represented by int32 and represent 4 bytes of memory, allowing them to represent any unicode code point characters. But characters are usually represented by

charwhich typically occupy one byte of memory. -

Runes can represent any unicode code points from ASCII to more complex characters like emojis and non-latin scripts as well. While C also supports characters beyond ASCII through multibyte encodings like utf-8, handling unicode characters directly is not as straightforward as in Go. C libraries and implementations may vary in their support for Unicode.

-

Go natively supports Unicode and provides built-in support for handling runes through it's

runetype and unicode utf-8 package as well. This makes it straight forward to iterate over and manipulate Unicode strings. -

So Go's native support for Unicode and runes make it easier to develop applications that need to handle diverse character sets and languages. So if we are making an appplication where we need to generate text in different languages. Go has a native support for all the languages world-wide.

-

Runes provide a more modern and robust approach for handling Unicode and international text representing Go's design philosophy of simplicity and efficiency in text processing.

Formatting Verbs

-

Go offers many formatting verbs to be used with

printfstatement. -

General Formatting verbs:

-

%v -> Prints the value in the default format

-

%#v -> Prints the value in Go-syntax format

-

%T -> Prints the type of the value

-

%% -> Prints the % sign

-

Integer Formatting Verbs:

-

%b -> Base 2

-

%d -> base 10

-

%+d -> Base 10 and always show sign

-

%o -> Base 8

-

%O -> Base 8 with leading 0o

-

%x -> Base 16, lowercase

-

%X -> Base 16, uppercase

-

%#x -> Base 16 with leading 0x

-

%4d -> Pad with spaces (width 4, right justified)

-

%-4d -> Pad with spaces (width 4, left justified)

-

%04d -> Pad with zeroes (Pads an integer with zeroes to ensure it has minimum width of 4 digits)

-

String Formatting Verbs:

-

%s -> Prints the value as plain string

-

%q -> Prints the value as a double-quoted string

-

%8s -> Prints the value as a plain string (width 8, right justified)

-

%-8s -> Prints the value as a plain string (width 8, left justified)

-

%x -> Prints the value as hex dump of byte values

-

% x -> Prints the value as hex dump of byte values with spaces

-

Boolean Formatting Verbs:

-

%t -> Value of the boolean operator in true or false format (same as using %v)

-

Float Formatting Verbs:

-

%e -> Scientific notation with 'e' as experiment

-

%f -> Decimal point, no exponent

-

%.2f -> Default width, precision 2

-

%6.2f -> Width 6, precision 2

-

%g -> Exponent as needed, only necessary digits

-

Go syntax format refers to format in which values are represented in Go code. For example, strings are enclosed in double quotes.

fmt Package

-

The

fmtpackage includes functions for printing to standard output, returning formatted strings and snaning input. -

Some Key Functions of fmt package :

-

Printing functions

- Print()

- Println()

- Printf()

-

Formatting Functions

- Sprint()

- Sprintf()

- Sprintln()

-

Scanning Functions

- Scan()

- Scanf()

- Scanln()

-

Error Formatting functions

- Error()

-

We hace the

Sprint()function which formats using the default formats for it's operands and returns the resulting string. It doesn't print anything to the console. It only returns the resulting string. -

The formatting functions that we have in Go like Sprint(), Sprintln(), etc., these quite evidently can also be used to concatenate strings.

-

Sprint() does not add a space in between the different values.

-

Sprintln() is a little advanced method which adds spaces in between the arguments and also adds a new line character at the end.

-

Sprintf() function formates according to a format specifier and results the resulting string.

-

fmt pacakge also brings us some functions that can help us take input from the user through the console.

-

The

Scan()function scans the text from the standard input and stores it into the provided variables. So we have to provide a variable for the input to be stored into. -

We have to the direct memory address to scan the input and store it inside the variables passed in the scan function. Because if we pass variables as arguments, the actual variable will not be passes to the function, instead a copy of that variable will be passed, so all the modifications will be done on the copy of the variable and not the actual variable. In order for us to modify the actual variables we give the memory address to

fmt.Scan(). -

Moreover, Scan() has three dots that means it can accept

Variadic Parameters. That means it can be multiple parameters or none. -

Scanln()function is similar to Scan() but it stops scanning at a new line and requires that there be exactly one item per input. -

Scan() does not accepts a blank input

-

Scanf()function scans text from standard input, storing successive space separated values into successive arguments as determined by the format specifier. -

We have to enter our inputs in the exact format that we have declared in the

Scanf()function. -

The

Errorf()function formats according to a format specifier and returns the string as a value that satisfies the error interface. -

If there's an error, first we declare a code block to handle the error and then we move on to handling the value, whatever we want to do with the value.

-

In conclusion, the fmt package is an essential tool for Go developers providing robust functions for formatting and printing text, scanning input and handling errors.

-

In APIs

Sprint()functions are used extensively.

Structs

-

Structs in Go are composite data types that allow you to group together different types of variables under a single name. They are similar to classes in Object Oriented Languages, but they are more lightweight and do not support inheritance.

-

Structs are defined using the

typeandstructkeywords followed by curly braces{}containing a list of fields. -

Fields are defined with a name and a type.

-

Anonymous Structs

-

Anonymous Fields

-

Methods :

func (value/pointer receiver) methodName(arguments, if any ...) <return type, if any> {

// Method implementation

}

-

Method Declaration

-

Value receiver method

func (t Type) methodName() { // Method Implementation } -

Pointer receiver method

func (t *Type) methodName(){ // Method Implementation } -

Comparing Structs

-

Structs can be initialized using a struct literal. We can provide values for individual filed during initialization. As with any variable, if we omit any field in a struct, it will be initialized with it's zero value.

-

Fields are accessed using a dot notation.

-

Similar to functions we also have anonymous structs. These anonymous structs are structs without a predefined type name. They are useful for temporary data structures.

-

Go supports attaching methods to structs. Methods are functions associated with a specific type. Methods are defined with a receiver, which is the struct type upon which the method operates.

-

Always define methods and structs outside the main function. Defining alone a struct inside main is fine but when there are methods associated with that struct inside the program, we cannot define the methods inside main function.

-

Structs and methods must be defined at the package level, not within the functions. It is by design in Go and that is because Go requires types and their associated methods to be declared in the global scope which is accessible throughout the package.

-

Another reason why structs and methods cannot be inside main is because of separation of concerns. Keeping type definitions and methods outside the main function ensures clear separation between data definitions and execution logic. This makes code more readable and more maintainable.

-

We can create instances of structs and we can call methods on those instances inside the main function. And other thing that we can do inside main function is implement our application logic and interact with our structs and their methods.

-

We do not configure the methods inside the structs. So why are methods not inside struct declarations ?

-

the first reason is design philosophy. Go emphasizes simplicity and clear separation between data types and methods. Methods are defined outside of the struct declaration to maintain a clear distinction between data and behaviour. The behaviour is dependent on the methods and data is the data types, the properties of the classes and the structs.

-

However in classes, we have class properties and methods inside the same class. The class properties of classes are like data types and the methods are similar to the methods declared in Go langauge.

-

Another reason is flexibility. Now this approach that we are defining the methods separately from structs, this approach allows methods to be defined for any type, not just structs and facilitates code organization and modularity.

-

To modify struct fields within a method, we use a pointer receiver instead of a value receiver. Pointer receivers allow the method to modify the original struct instance.

-

Pointers make the actual memory address available to the function. But if we are using value receiver it means we are passing the value to a function and it will not modify the original value. So in order to access the original value and modify that, we have to use a pointer.

-

Go supports embedding structs within other structs. This allows for creating a composition of structs.

-

We can define structs with anonymous fields as well. It simplifies the structure definition by promoting the fields of the anonymous struct to the outer struct. In Go, anonymous fieds in struct must be a type. If you declare a field like phone it needs to be of a specific type.

-

Anonymous fields simplify the structure definition by promoting the fields of the anonymous struct to the outer struct.

-

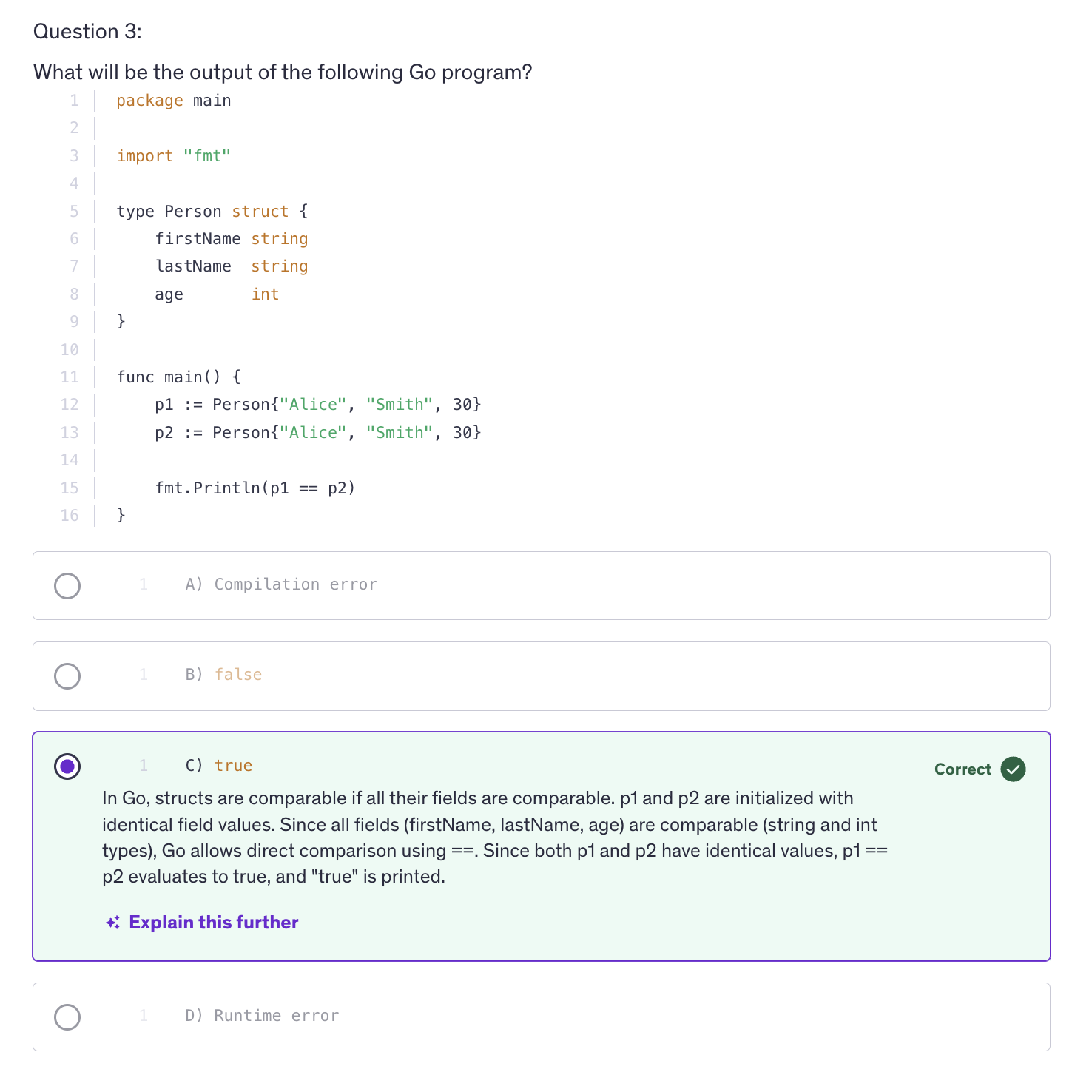

Structs are comparable if all their fields are comparable. You can compare two structs of the same type using the equality operator.

Methods

-

We make methods by declaring a receiver. The receiver will be a struct and that receiver will be associated with that method. So these methods are functions associated with a particular type not necessarily with struct.

-

So methods are not just associated with structs. They can be associated with any specific type. Methods enable us to define behaviors and we define behaviours by using functions. So we define these behaviors that operate on instances of that type.

-

Methods are essential in Go for encapsulating behavior specific to a type and promoting code reuse through structured and organized code.

-

Methods are declared with a receiver which specifies the type that the method operates on and there are two type of receivers in Go : value receivers and pointer receivers.

-

We use a value receiver if the method does not modify the receiver instance. We use a pointer receiver if the methos needs to modify the receiver instance, or if you want to avoid copying large structs because copying large structs means you are occupying a bing chunk in the memory.

-

It's not a thumb rule that you have to create an instance. You can use the type to associate the function with that type to make it a method of that type.

type MyType int

func (MyType) welcomeMessage() string{

return "Welcome to MyType"

}

-

We don't need an instance because we are not accessing any data inside this type. So we need to use instance only if we are using the instance for extracting or modifying the value.

-

Struct embedding allows methods of an embedded structs to be promoted to the outer struct.

Interfaces

-

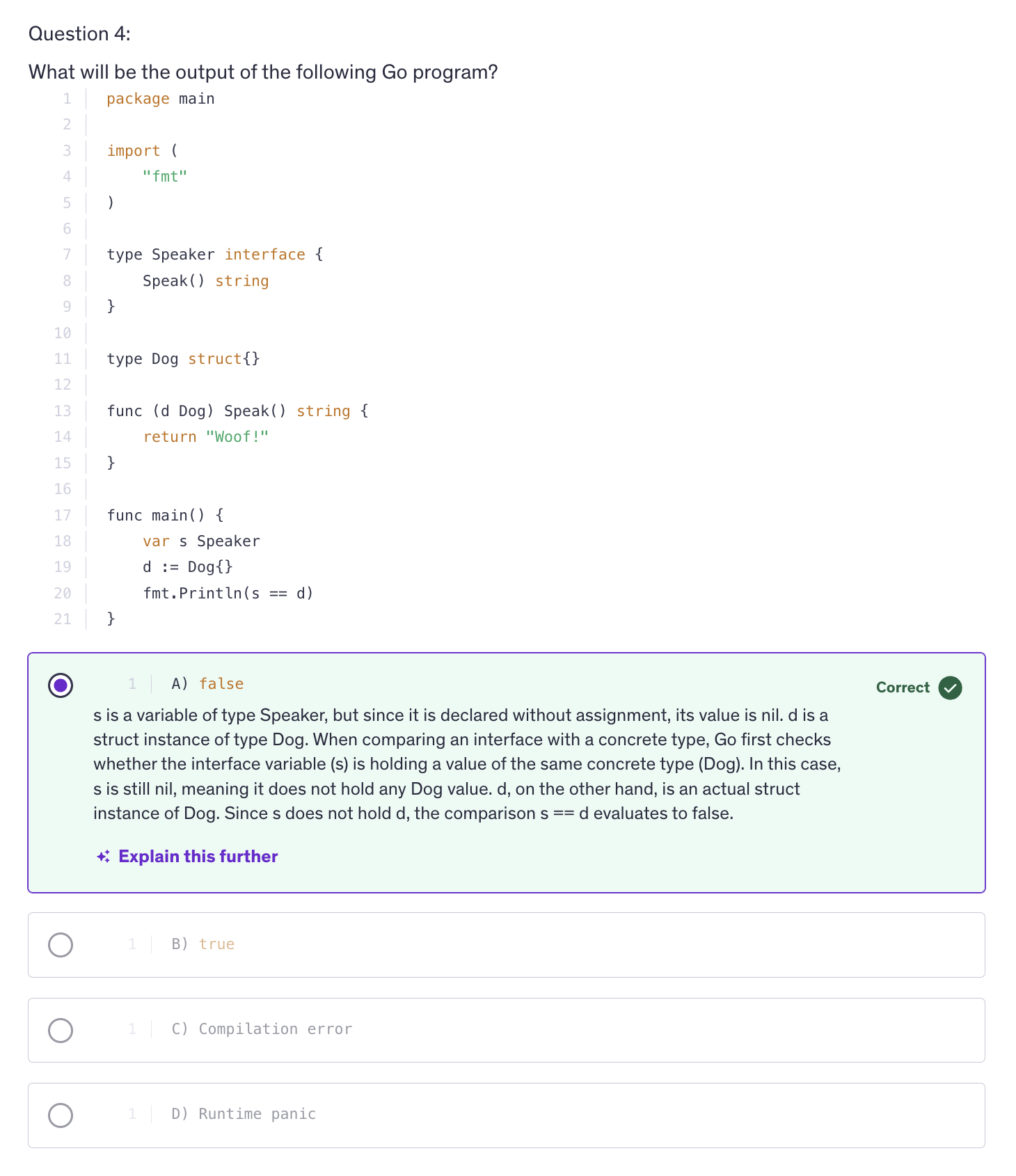

Interfaces promote code reuse, decoupling and polymorphism without relying on explicit inheritance.

-

An interface is declared using the

typekeyword followed by a name and keywordinterfaceand a list of method signatures. -

Interfaces are also declared outside the main function.

-

A type implicitly satisfies an interface if it implements all the methods defined by that interface.

-

Any method or any function that needs to be exported should start with an uppercase letter. So in order for us to export any method, struct or any type we have to name that type with an uppercase alphabet.

-

All a struct needs to do is implement all the methods defined in that interface. All the methods that are defined in an interface should be implemented by that struct to be able to get associated with that interface type.

-

An interface in Go is a way to define a set of methods that other types must implement in order for them to be considered the type that, which the interface if of.

-

anyis an alias of interfaces. -

We can use interface when we are ready to accept any type of value in our function. So if I use a vairadic parameter that means we can accept any number of values of different types.

-

Interface means that you are flexible to any kind of value.

-

Use empty interfaces judiciously typically for scenarios requiring dynamic types or unknown types.

-

Interfaces in Go facilitate Polymorphism and enable writing modular, testable and maintainable by promoting loose coupling between types.

Struct Embedding

-

Struct embedding allows a struct to inherit fields and methods from another struct type. It's a powerful mechanism for code re-use and structuring data.

-

Methods can be overridden by redefining them in the outer struct.

-

Anonymous fields promote all fields and methods of the embedded struct while named fields require accessing fields with their explicit names.

Best Practices and Considerations

- Composition over inheritance

- Avoid Diamonf Problem

- Clarity and Readability

- Initialization

Generics

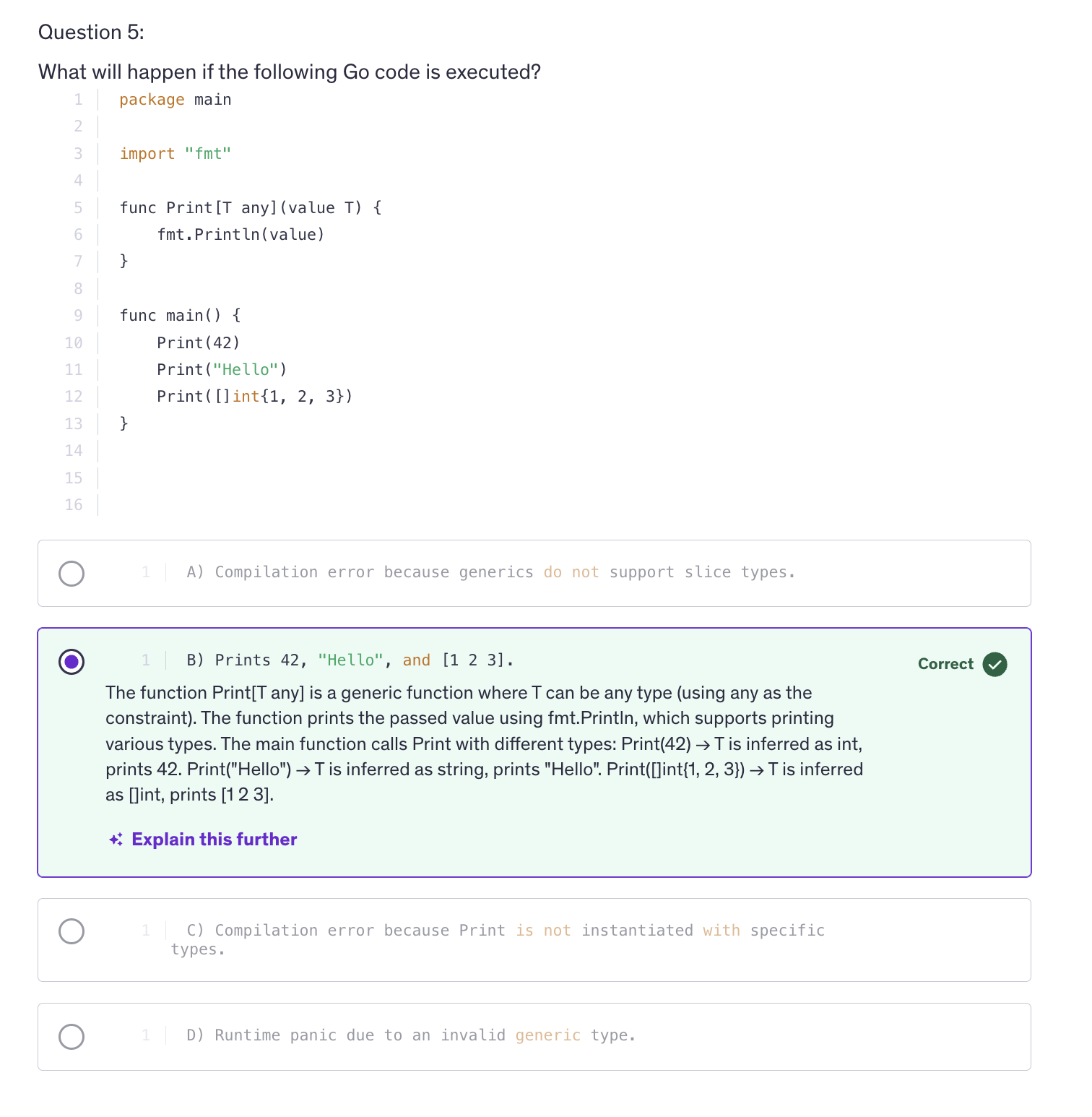

-

Generics in programming languages provide a way to write functions, data structures and algorithm that can handle various types without specifying each type explicitly. This promotes code re-use, type safety and enhances the flexibility of programs.

-

Generics in go are declared using type parameters, which are placeholders for types that can be specified when using the generic function or data structure.

-

anyis just an alias for interface and interface means that it can be of any type. -

Benefits of Generics :

-

Code Reusability

-

Type Safety

-

Performance

-

Considerations

-

Type Constraints

-

Documentation

-

Testing

Intermediate Quiz 1

Errors

-

Errors are a funcdamental part of any programming language, allowing programs to handle exceptional conditions gracefully.

-

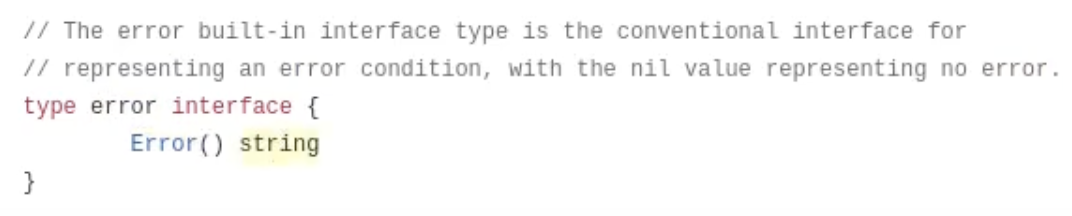

In Go, Errors are represented by the error interface, which is a built-in type used to indicate the presence of an error condition.

-

Errors are typically created by using the

errorspackage or by implementing the error interface. -

Do not unnecessarily use uppercase when naming structs or any other type, always make sure that you use uppercase only when you are exporting your type, your struct or anything else outside the package.

-

Example:

func main() {

if err1 := eprocess(); err1 != nil {

fmt.Println(err1)

return

}

}

type myError struct{

message string

}

func (m *myError) Error() string{

return fmt.Sprintf("Error: %s", m.message)

}

func eprocess() error {

return &myError{"Custom Error Message"}

}

-

We are using Error() because Go has a built-in package. The Go's built in package have an interface which is the error interface. The error interface has a single method which is

Error(). An in Go an error is represented by the error interface, and this error method returns a string that describes the error. -

So by utilizing this interface, we can propagate our custom error messages as we please. Because it is an interface, we can modify it according to our requirements. We can use multiple lines, multiple kinds of data. We can use different kinds of formatting whatever we want because it is an interface. And interfaces are completely blank, all you need to do is implement the methode.

-

Official error interface implementation of Go

-

Error method needs to return a string and that's why our Error() method returned a string.

-

When we are using any function from the built in package it is available to us by default. The built-in pacakage is part of the Go runtime and is special in that it provides the foundations for the language itself. Therefore you can use fundamental types and functions directly in your code.

-

In conclusion, error handling in go revolves around the error interface and idiomatic practices like checking errors, propagating errors and custom error types. Proper error handling ensures that programs are robust and reliable, providing clear feedback on exceptional conditions.

Custom Errors

-

Custom Errors can encapsulate specific details about what went wrong, making it easier to debug and understand the root cause of errors. It provides an enhanced error context.

-

Context and custom errors allow us to distinguish between different types of errors and handle them differently in our application logic.

-

Custom errors also ensures consistency in error handling accross our code base, promoting maintainability.

-

In Go, custom errors are nothing but types that implement the error interface. It requires the implementation of errxor method that returns a string.

-

When we are handling errors, we have to return so that the rest of the statements do not get executed. That's the point of handling the error, right ?

-

Wrapped Errors : Wrapped Errors were introduced after Go version 1.13.

%wformatting verb stands for wrapped error. -

Our custom error helps us to pass on the error message much more efficiently and much better error description from multiple functions that we are executing in a nested way.

-

In conclusion, custom errors in go enhance our error handling by providing more context and differentiation in error reporting.

String Functions

-

Strings in go are a sequence of bytes and Go provides a rich set of built-in functions and methods to manipulate and work with strings effectively.

-

Functions

-

integer to string :

num := 18 str := strconv.Itoa(num) -

string splitting

fruits := "apple,orange,banana" parts = strings.Split(fruits, ",") // ["apple", "orange", "banana"]strings.Split()converts your original string into an array and it divides that string based on the seperator value that you give it.

-

strings.Join()-> concatenates elements of a slice into a single string with a separator.countries := []string{"Germany", "France", "Italy"} joined := strings.Join(countries, ", ")Go is smart enough to add separator only between the consecutive words and not after the last word.

-

Function to check if a string contains a subset characters, it could be one character or multiple characters combined.

strings.Contains(str, "test") // returns true or false. -

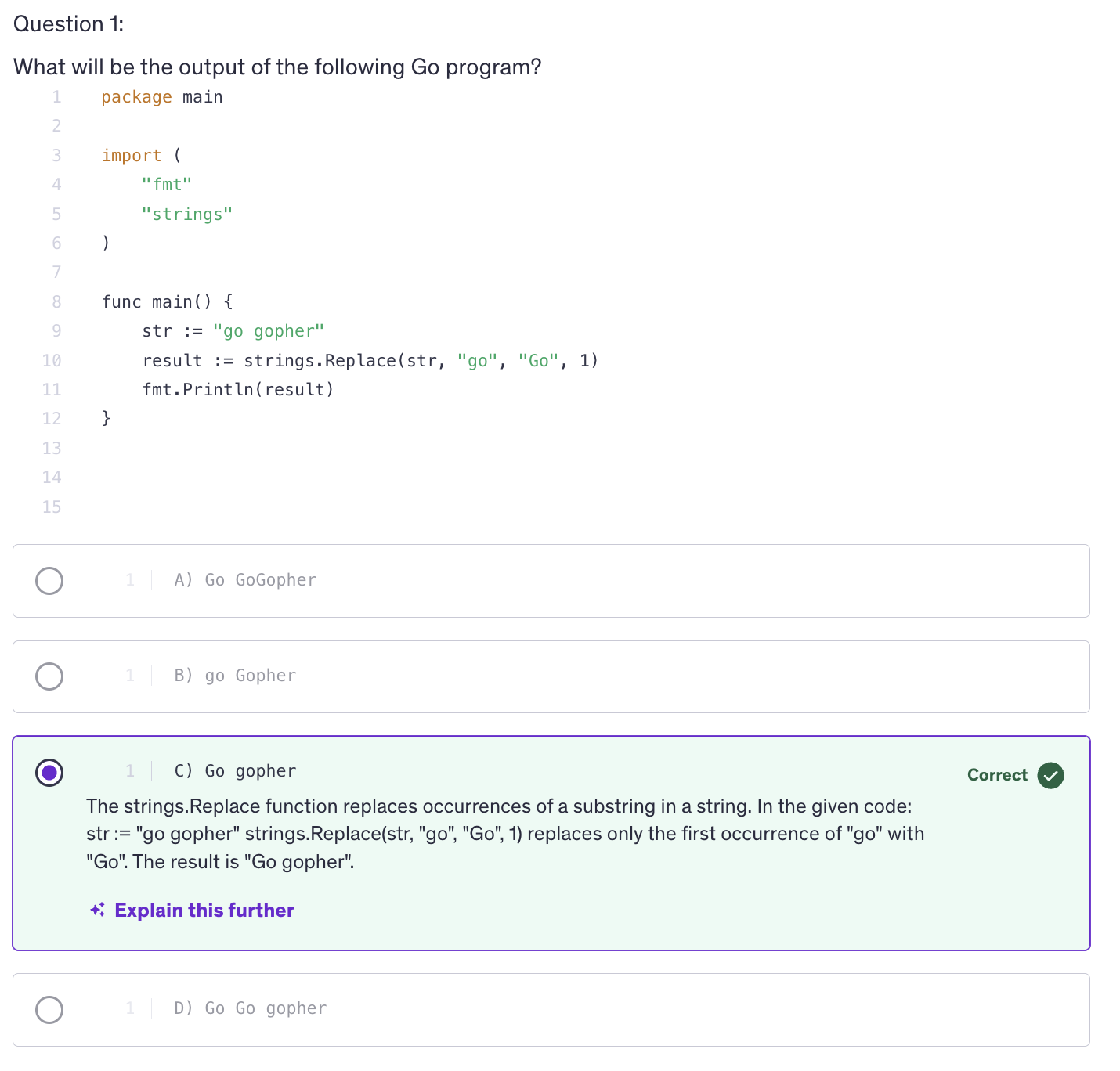

strings.Replace()-> Replaces the occurances of a substring within a string with another substring.strings.Replace(str, <string to be replaced>, <string by which it is replaced>, <no. of occurances to be replaced>) strings.Replace(str, "Go", "World") -

We can also trim leading and trailing whitespace from our string.

strwspace := " Hello Everyone! " fmt.Println(strwspace.TrimSpace(strwspace)) // "Hello Everyone!" -

We can change the case of our strings to lower or to upper during the runtime.

fmt.Println(strings.ToLower(strwspace)) fmt.Println(strings.ToUpper(strwspace)) -

strings.Repeat()-> repeat something for a fixed number of times.fmt.Println(strings.Repeat("foo", 3)) // foofoofoo -

We can also count the occurance of an alphabet or a substring inside another string.

strings.Count("Hello", "l") // 2 -

We can also check for prefix and suffix.

fmt.Println(strings.HasPrefix("Hello", "He")) // truefmt.Println(strings.HasSuffix("Hello", "la")) // false -

Go offers us a regular expression package which allows pattern matching and manipulation of strings based on complex rules.

-

regexpis a package in Go andMustCompile()is a method defined inregexppackage. -

MustCompile() -> is a function that compiles a regular expression. A regular expression is something yes which needs to be compiled.

-

The pattern needs to be inside Backticks. Regular Expressions needs to be enclosed in backticks to be considered a raw string literals. When we are using regular expressions we are defining a pattern and that pattern needs to be matched. So regular expression matches the pattern that we define with different values.

-

d -> digits

- -> it has to be one or more

eg:

\d+-> It has to be one or more digits -> check for multiple digits

str5 := "Hello, 123 Go! 78"

re := regexp.MustCompile(`\d+`)

matches := re.FindAllString(str5, -1)

fmt.Println(matches)

-1 indicates that we are looking for all the matches for that regular expression inside the source string.

FindAllStrings() -> returns an array of strings. It's going to extract all the matches and store them successively in a slice. So it returns a slice of strings. So we have to store the slice in a variable.

-

We have another package which let's us work on Unicode characters and strings and that is called the unicode

utf8package.utf8.RuneCountInStringreturns the number of runes present in the string. -

Since strings are immutable in Go, we have something called

strings.Builder()for efficient string concatenation in performance critical scenarios. -

strings.Builder()is a type in Go's standard library specifically in the strings package that provides efficient strings building. It's designed to help you concatenate strings in a memory efficient wat instead of creating many intermediate strings, which can be expensive in terms of memory and processing time.strings.Builder()allows you to build your final string incrementally. -

strings.Builder() is more efficient than using the concatenation (

+) operator or even when usingfmt.Sprintf()fo concatenating multiple strings. strings.Builder is still much better than these options because it minimizes memory allocations and copies. -

Builder provides several ways for adding content such as write, writeString, writeRune and writeByte. and builder can be used immediately after declaration without initialization.

-

A builder can be reused by calling the reset method which clears it's internal buffer. The final string can be retrieved using the string method.

-

We can keep on building that string. And this builder is memory efficient and prevents memory leaks. It does not make copies. It keeps on building the string in a memory efficient way and in a memory secure way.

-

we have to include character in

builder.WriteRune()and characters are stored in single quotes. -

builder keeps on writing to the existing string that it has stored in its memory. So everything that we write a rune, a string or anything, it will keep on adding to the existing data that it has.

-

So in order for us to start a new string, we need to reset the builder.

-

When it comes to memory efficiency, prefer strings.Builder or bytes.Buffer for building large strings to avoid unnecessary memory allocations.

String Formatting

-

String formatting in Go refers to the techniques used to create formatted output from variables or constants. Go provides several mechanisms for formatting strings, including the fmt package, string interpolation or format specifiers.

-

When it comes to format specifiers, we can use flags or string alignment as well to format our strings in a desired way.

-

Go supports string interpolation using backticks.

-

BAckticks makes a string raw, a raw string literal, which means that it is going to consider everything as a string literal and it's not going to let any escape sequence execute.

-

When you need to embed special characters or multiple lines of text without interpreting escape sequences, backticks are very useful. This is particularly handy when dealing with regular expressions. This improves readability and reduces the chances of errors due to missed escape sequences.

-

Another use case would be when we are using SQL query. So in SQL queries using backticks ensures that the query remains intact without needing to escape special characters or worry about line breaks. It enhances readability and reduces the cognitive load when writing or maintaining such code.

Text Templates

-

Text templates in go are a powerful feature that allow you to define and execute templates for generating text output. They are particulary used when you need to generated structured texts wuch as HTML, JSON, SQL Queries or any other formatted text output.

-

A template is a string or a file that contains one or more action sequences. These actions control the template execution, such as inserting values, iterating over data or executing conditionals.

-

Concept of Actions: These actions are enclosed in double curly braces. There are several types of actions like variable insertion.

-

Variable Insertion: [{.FieldName}]

-

Pipelines: {{functionName .FieldName}}

-

Control Structures: {{if .Condition}} ... {{else}} ... {{end}}

-

Iteration: {{range .Slice}} ... {{end}}

-

Advanced Features

-

Nested Templates: {{template "name" .}}

-

Functions

-

Custom Delimiters

-

Error Handling: template.Must()

-

Use Cases

-

HTML Generation

-

Email Templates

-

Code Generation

-

Document Generation

Best Practices

-

Separation of Concerns

-

Efficiency

-

Security

-

Templates are executed by applying them to data structures known as Template Data. These data structures can be simple values, structs, slices, maps or any custom types that you define.

-

Templates are a part of 2 packages:

-

We have text template as well as html template package. HTML template package has some advanced features that text template package does not have. Text template package has basic features of templating.

-

Once we have create a template, we have to parse the template, we have to process that template. We use

.Parse()method. It takes an argument which is a string, but this string is not a usual string. This is actually a string that we want to be processed as a template. -

Template is something that we can reuse repeatedly for different values, and the name is going to be changing everytime we use this template.

-

To output the message from the template use

.Execute()function on that template. It will return an error if there is one so make sure to handle the error in a variable.

err := tmpl.Execute(os.Stdout, data)

-

Execute takes the first argument as the target, the destination where it needs to send the output to. So we are sending our output to the standard output device of our computer which is the console. And the next argument is the data.

-

There's another way of using template via using

template.Must()where we don't have to handle the errors ourselves and template.Must() will automatically panic if we have an error while parsing our template.

tmpl := template.Must(template.New("example").Parse("Welcome, {{.name}}! How are you doing?\n"))

this code is equivalent to :

tmpl, err := template.New("example").Parse("Welcome, {{.name}}! How are you doing?\n")

if err != nil {

panic(err)

}

-

.Must() is used to handle the error from the parse. And we'll not have to handle the error ourselves, which will save us some more typing and which will make our code mmore readable.

-

template.New() -> creates a new template and it takes the name of the template as an argument.

-

template.Parse() -> parses the template definition. So it takes a string and that string is in the format of template that we want. And then template.Parse will process the string and convert it into a template that we will use further in our program. So

template.Parse()helps us to parse the template string and it turns the string into a reusable template object that can be executed with custom data. -

template.Execute()-> used to apply a parsed template to a data structure and write the result to an output destination. It could be a file or it could be a console or something else. -

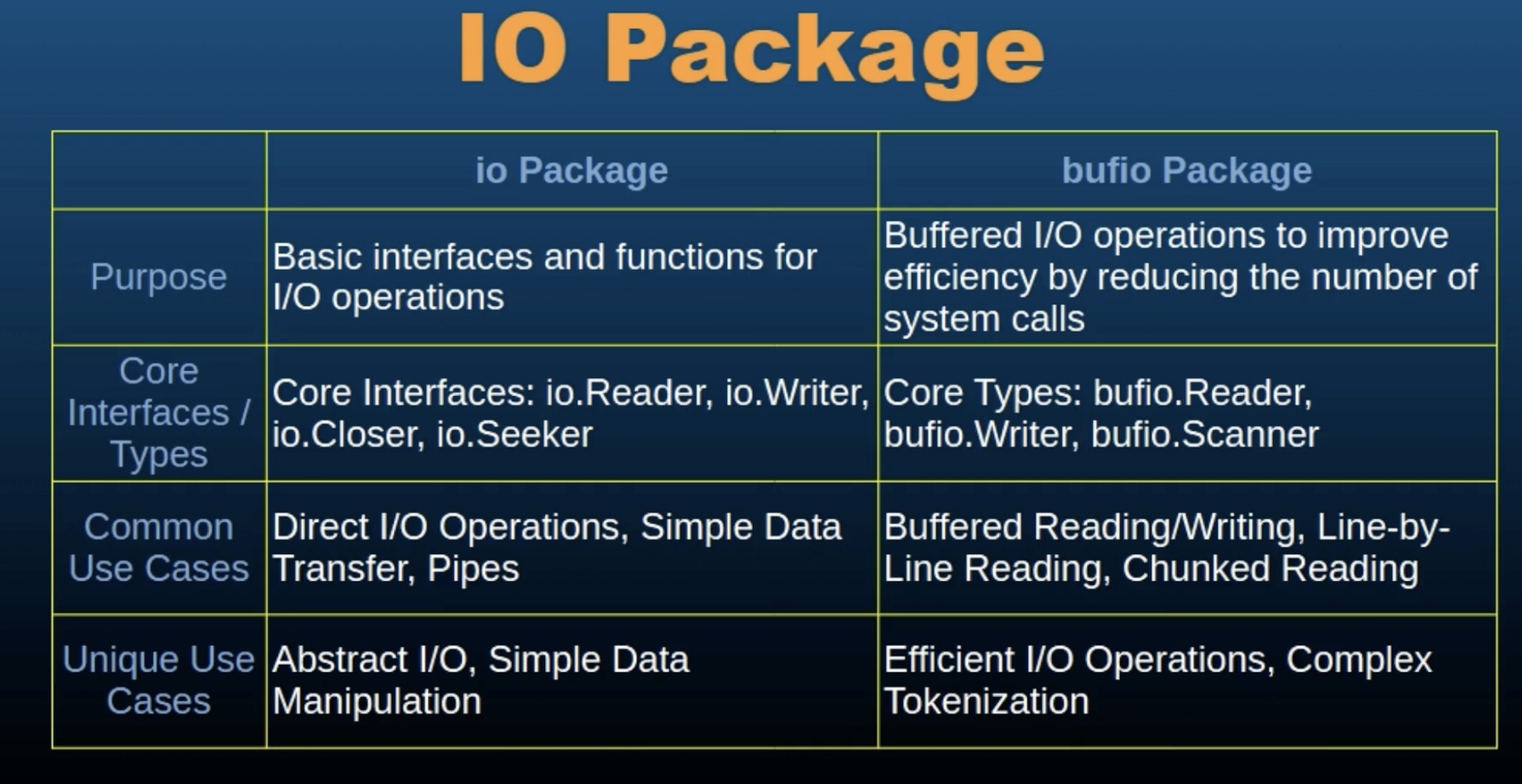

bufio-> Buffered input output package. -

bufio.NewReader(os.Stdin)-> to read from the console. Console is the standard input device. -

to get the input from the user :

reader := bufio.NewReader(os.Stdin)

- .ReadString() -> takes an argument as a delimeter. So that means it is going to accept the input from the console until it reads the delimeter from the console. It takes a

bytestype as an argument, that's why we have to use single quotes.

reader.ReadString('\n')

ReadString()-> generates a string and an error so handle both of them by storing them in the variable. Always read strings from the console and then convert them to whatever you want.

Best Practices

-

Separation Of Concerns: Keep your templates focused on presentation logic avoiding business logic.

-

Precompile you templates for re-use if performance is critical to your application.

-

Also sanitize inputs to prevent injection attacks, especially when generating HTML because there are a lot of attacks which happen using the user input.

-

Overall, text templates in Go are a powerful tool for generating textual output based on structured templates and dynamic data. They offer flexibility, ease of use and support for complex scenarios like conditional logic, iteration and function invocation within templates whether for web applications, system administration scrips or data processing tasks.

Regular Expressions

-

Regular expressions provide a powerful way to search, manipulate and validate text strings based on patterns. They are widely used in text processing, searching and data extraction tasks where patterns of characters need to be matched or manipulated.

-

In Go, the regular expression package provides support for working with regular expressions.

-

To work with the regex pattern in Go, you first compile it using the

regexp.Compile()orregexp.MustCompile(). -

regular expression to match email address

re := regexp.MustCompile(`[a-zA-Z0-9._+%-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}`)

-

The

+means that anything inside the bracket is going to have multiple notable occurances. -

A period is declared with a backslash because it is outside the square brackets.

\. -

To limit the occurances use

{}. eg:{2,}means two or more occurances. -

The hyphen

-has a special meaning. And meaning is a range. So if we are not using hyphen as a range, it needs to come as the last character to let the compiler know that we are not using hyphen as a range, but as a symbol, as an acceptable character. that's why[+-%]is invalid and[+%-]is valid regexp. -

Regular expressions allow capturing parts of the matched strings using parentheses. Parentheses create capturing groups which allow us to extract parts of the complete matched string.

-

Regex to capture dates

re := regex.MustCompile(`(\d{4})-(\d{2})-(\d{2})`)

-

There are many regular expression generators online for such tasks.

-

We can also replace characters in our target string.

-

Flags and Options:

-

i-> case insensitive. we are accepting characters in all cases. -

m-> multi-line model. -

s-> dot matches all -

?i-> Flag which makes the regular expression case insensitive. WHen we are using Flags we need to make sure that those flags start with a question mark. This is how regular expressions accept flags. -